The importance of sensor calibration for object detection

- Jul 5, 2021

Updated: Jul 8, 2021

Sensors are the eyes into the world for autonomous systems, which use sensor data to navigate and to understand their surrounding environment. Algorithms are developed to detect objects and to measure the distance to those objects relative to the mobile robot. The computed information is used to control the robot, so it must be of high quality. Especially in safety-critical applications, wrong or inaccurate distance information can have catastrophic consequences, because the autonomous system might not react properly.

But one might ask what the accuracy and reliability of those algorithms depend on. Using multiple sensors increases the confidence of object detection algorithms. When multiple sensors are used, however, they have to be calibrated as accurately as possible in order to obtain the best possible object detection and a reliable distance computation.

In the following section we provide an industry-relevant example of the impact of extrinsic sensor calibration on object detection and distance measurements:

Lidar to camera calibration

Estimation of the transformation between a Lidar and a camera sensor for data fusion and association: "Where is the camera with respect to the Lidar, and vice versa?"

Rotation and translation between Lidar and Camera

A correctly calibrated sensor setup is necessary for your computer vision application and can have a major influence on the accuracy of your results.
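To make the role of rotation and translation concrete, here is a minimal sketch of how a Lidar point could be mapped into a camera image. The axis conventions, the identity-like extrinsics, the intrinsic values, and the function names are all assumptions chosen for illustration; they are not taken from any particular sensor setup or tool.

```python
# Assumed axis conventions (not stated in the article):
#   Lidar:  x forward, y left, z up
#   Camera: x right, y down, z forward
R_LIDAR_TO_CAM = [
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
    [1.0, 0.0, 0.0],
]
T_LIDAR_TO_CAM = (0.0, 0.0, 0.0)  # hypothetical: both sensors at the same position


def lidar_to_camera(p_lidar, rotation, translation):
    """Apply p_cam = R * p_lidar + t to a single 3D point."""
    return tuple(
        sum(rotation[i][j] * p_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project_to_image(p_cam, fx, fy, cx, cy):
    """Pinhole projection; returns None for points behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# A Lidar return 100 m straight ahead lands on the principal point (cx, cy).
p_cam = lidar_to_camera((100.0, 0.0, 0.0), R_LIDAR_TO_CAM, T_LIDAR_TO_CAM)
pixel = project_to_image(p_cam, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixel)
```

Any error in the rotation or translation used here moves every projected Lidar point in the image, which is why the extrinsic calibration directly affects which points end up inside a detection.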

Application: Object (Car) detection in 3D

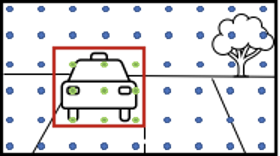

1. 2D Detection in Camera

→ Bounding box (in 2D camera coordinates)

2. Match 3D Lidar points with bounding box

→ 3D Position (in 3D world coordinates)

*assuming average car width of 1.8m and a Lidar with a horizontal resolution of 0.35°
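The two steps above can be sketched as follows. This is a simplified illustration, assuming the Lidar points have already been projected into the image as `(u, v, depth)` tuples. The function name `estimate_distance` and the choice of the median as the aggregation are assumptions for this sketch, not a description of a specific product or algorithm.

```python
from statistics import median


def estimate_distance(projected_points, bbox):
    """Median depth of the Lidar points that fall inside a 2D bounding box.

    projected_points: iterable of (u, v, depth_m) tuples in image coordinates.
    bbox: (u_min, v_min, u_max, v_max) from the 2D camera detection.
    Returns None if no point falls inside the box.
    """
    u_min, v_min, u_max, v_max = bbox
    depths = [
        depth
        for u, v, depth in projected_points
        if u_min <= u <= u_max and v_min <= v <= v_max
    ]
    return median(depths) if depths else None


bbox = (625.0, 340.0, 655.0, 380.0)

# Well-calibrated case: the three points inside the box all lie on the car.
good = [(630.0, 360.0, 100.0), (640.0, 360.0, 100.2),
        (650.0, 360.0, 99.9), (700.0, 360.0, 140.0)]
print(estimate_distance(good, bbox))

# Miscalibrated case: two background points now fall inside the box.
bad = [(630.0, 360.0, 140.0), (640.0, 360.0, 140.0), (650.0, 360.0, 100.0)]
print(estimate_distance(bad, bbox))
```

With the median, the estimate stays correct as long as a majority of the associated points actually lie on the car, and it jumps to the background depth once they do not.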

Calibration Influence on Accuracy

If the sensor setup is calibrated properly, we can correctly identify the four green dots belonging to the car detected in the camera image, and we know there is a car 100 m ahead of us.

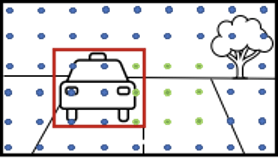

Assuming the horizontal rotation between camera and Lidar is miscalibrated by only 0.70°, the result looks like the following.

Now 2/3 of the Lidar points assigned to the car bounding box have a “wrong” depth, which will result in a wrong depth/distance estimate.
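The 2/3 figure can be reproduced with a short calculation. The sketch below assumes a simplified model in which the car is seen head-on, Lidar columns are evenly spaced at the horizontal resolution, and a yaw error shifts the association by a whole number of columns. The function names are illustrative only.

```python
import math


def columns_on_target(width_m, distance_m, resolution_deg):
    """Approximate number of Lidar columns that hit a target of the given width."""
    angular_width_deg = math.degrees(2.0 * math.atan(width_m / (2.0 * distance_m)))
    return max(1, round(angular_width_deg / resolution_deg))


def misassociated_fraction(width_m, distance_m, resolution_deg, yaw_error_deg):
    """Fraction of columns in the bounding box that no longer lie on the target.

    Simplification: the yaw error is rounded to a whole number of columns.
    """
    n_columns = columns_on_target(width_m, distance_m, resolution_deg)
    shifted = round(abs(yaw_error_deg) / resolution_deg)
    return min(shifted, n_columns) / n_columns


# 1.8 m wide car at 100 m, 0.35 deg resolution, 0.70 deg yaw miscalibration.
print(columns_on_target(1.8, 100.0, 0.35))
print(misassociated_fraction(1.8, 100.0, 0.35, 0.70))
```

At 100 m the car spans only about three Lidar columns, so a shift of two columns is enough to replace most of the points in the bounding box with background returns.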


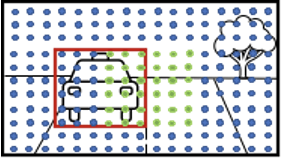

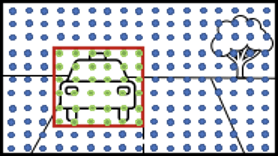

If the car ahead is 50 m away (instead of 100 m), the Lidar points on the car form approximately a 6x6 grid.

We assume that the correct distance of the car can be computed if at least half of the associated points reflect the car's correct distance.

In this case, false detections start at a miscalibration of only 1.05° (3 times the horizontal resolution, which leaves half of the points within the detected bounding box “wrongly” associated).
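As a rough check of these numbers, the angular width of the car and the critical miscalibration can be worked out as follows. The symbols $w$ (car width), $d$ (distance), $\Delta\theta$ (horizontal resolution), $n$ (Lidar columns on the car), and $\theta_{\text{crit}}$ are introduced here for illustration, and the car is assumed to be seen head-on.

$$
\theta_{\text{car}} = 2\arctan\!\left(\frac{w}{2d}\right) = 2\arctan\!\left(\frac{1.8\,\text{m}}{2 \cdot 50\,\text{m}}\right) \approx 2.06^\circ
$$

$$
n \approx \frac{\theta_{\text{car}}}{\Delta\theta} = \frac{2.06^\circ}{0.35^\circ} \approx 5.9 \approx 6
$$

$$
\theta_{\text{crit}} = \frac{n}{2}\,\Delta\theta = 3 \cdot 0.35^\circ = 1.05^\circ
$$

At a distance of 50 m, a rotation of 1.05° corresponds to a lateral shift of about $50\,\text{m} \cdot \tan(1.05^\circ) \approx 0.92\,\text{m}$, which is roughly half of the assumed car width.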


This is why we at IVISO are on a mission to build the best possible sensor calibration tools for the industry, enabling fast and accurate extrinsic and intrinsic calibration of the diverse sensors used in automated mobile machines. Based on our multiple years of industry projects, we have developed Camcalib, a tool for sensor calibration.

Do you want to find out how we solve this problem? Then visit the camcalib homepage.

Author:

Nicolas Thorstensen

Thomas Wolf
